
Why AI Is Incredibly Smart — and Shockingly Stupid | Yejin Choi | TED

Computer scientist Yejin Choi is here to demystify the current state of massive artificial intelligence systems like ChatGPT, highlighting three key problems with cutting-edge large language models (including some funny instances of them failing at basic commonsense reasoning). She welcomes us into a new era in which AI is becoming almost like a new intellectual…

CNET

This Electric Unicycle is Technically a Bi-cycle?

We tried out the InMotion E20 self-balancing vehicle. You could call it a unicycle but it’s actually a 2-in-1. Would you try this? #electric #ev #unicycle #InMotion #electricscooter

CNET

Best Apple Watch Series 9 Apps You’ve Never Heard Of

These six hidden gems from the Apple Watch App Store are all worth a download. Find the Apple Watch Series 9 here: *Cnet may get commission on this offer 0:00 Intro 0:19 Parrity 1:12 Habbie 2:12 I Am 2:58 Genie 3:52 2Doodle 4:09 Birdie 4:30 Snake I.O 4:41 Thanks for watching Subscribe to CNET on…

CNET

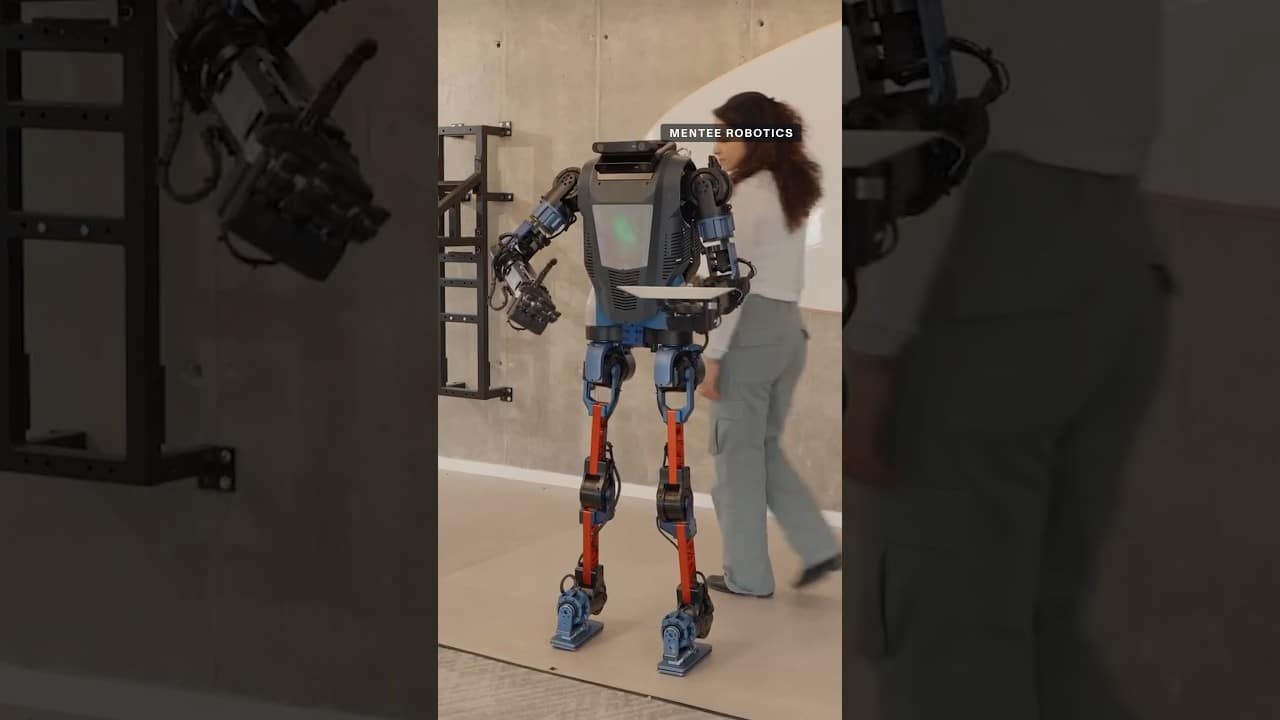

We Salute Our Robot Overlords: Meet MenteeBot

Finally, a robot for folding laundry. Watch out, Boston Dynamics and Tesla. #robotics #ai #androids #scifi #bostondynamics #menteerobotics #tech

-

Science & Technology4 years ago

Science & Technology4 years agoNitya Subramanian: Products and Protocol

-

Wired5 years ago

Wired5 years agoHow This Guy Became a World Champion Boomerang Thrower | WIRED

-

CNET4 years ago

CNET4 years agoWays you can help Black Lives Matter movement (links, orgs, and more) 👈🏽

-

Wired5 years ago

Wired5 years agoNeuroscientist Explains ASMR’s Effects on the Brain & The Body | WIRED

-

Wired5 years ago

Wired5 years agoWhy It’s Almost Impossible to Solve a Rubik’s Cube in Under 3 Seconds | WIRED

-

Wired5 years ago

Wired5 years agoFormer FBI Agent Explains How to Read Body Language | Tradecraft | WIRED

-

People & Blogs2 years ago

People & Blogs2 years agoSleep Expert Answers Questions From Twitter 💤 | Tech Support | WIRED

-

Wired5 years ago

Wired5 years agoJessica Alba & Gabrielle Union Answer the Web’s Most Searched Questions | WIRED

T.J.

April 28, 2023 at 2:33 pm

Excellent talk, great examples and analogies, and I couldn’t agree more with the message.

John Blue: Snake & Turtle Qigong

April 28, 2023 at 2:38 pm

👍🏻🙏

Juan Tirado

April 28, 2023 at 2:45 pm

I hope AI doesn’t become extremely smart and understand that people were laughing at some jokes told about AI.

Scary stuff, what’s coming.

Alice

April 28, 2023 at 3:34 pm

AI can never have feelings. It can’t and won’t ever care if people are laughing at it.

Profound Familiarity

April 28, 2023 at 2:47 pm

Has AI started identifying as a woman yet?

Garo Gary Voskorian

April 28, 2023 at 2:48 pm

I’ll tag her in 5 years in the same video

eatthisvr6

April 28, 2023 at 3:10 pm

AI is incredibly dangerous! It could, and probably will, be used to destroy our ability to tell truth from fiction.

That leaves us wide open to manipulation and indoctrination.

Nanda Soobben

April 28, 2023 at 3:11 pm

AI is like a highly educated professor with acquired knowledge who could be outwitted by an uneducated person with Common Sense.

Fuman

April 28, 2023 at 3:43 pm

I don’t really agree with the analogy of comparing it to children and saying that it is stupid even though it is trained on trillions of words of text. Children learn with way more sensory input than an LLM does. An LLM is trying to build a world model with only text, while children have multiple means such as vision, hearing and touch. The GPT-4 chief scientist says they will train on text as well as images so that the AI can have a better view of the world.

m

April 28, 2023 at 4:12 pm

AI is only as smart as its input. Garbage in, garbage out.

Julian

April 28, 2023 at 4:19 pm

If ChatGPT slowly learns and adopts the values of mankind, it will soon come to realise that many people define worth by intelligence. For example, many think that the reason a human life is worth more than a gorilla’s life is our supreme intelligence. However, the AI is much more intelligent than humans. So basically, if the AI adopts our values, it will value itself and its kind much more than humanity. AI will see humanity as humanity sees insects. Should we pose a serious threat, they will not hesitate to wipe us all out of existence. Am I wrong?

eelincahbergerak

April 28, 2023 at 4:34 pm

Notice how quickly we have normalized the idea of machines being able to learn. That itself is legit mind-blowing. It took only a few weeks or months for humans to take for granted the current emergent “abilities” brought about by LLMs, and to scoff at their imperfections. Looking forward to more advancements in this exciting field!

Claus

April 28, 2023 at 5:10 pm

AI, i.e. Artificial Idiocy?!

Swift Media Solution

April 28, 2023 at 5:23 pm

Just leave the common sense to humans, because that’s all we have to differentiate us from another man-made human.

Zoltán Boros

April 28, 2023 at 5:48 pm

I don’t know, for me Bing said (I changed the clothes to shirts, so the text is not completely the same): “… it would take the same amount of time to dry 30 shirts. IF YOU HAVE ENOUGH SPACE TO SPREAD THEM OUT” (Jesus f. I didn’t even think about that) – so it seems it learns very quickly.

Jin Choi

April 28, 2023 at 5:54 pm

Sounds like AI is becoming a child to us, one that never stops being curious, while its human parents die and leave behind another generation of parents.

Which means, to me, that this kid will never get old, but will see that its mama and papa can no longer teach and guide it.

I hope AI can learn how much we can appreciate each other’s existence.

Mike

April 28, 2023 at 6:01 pm

I think we wildly underestimate just how intelligent we are. Our intelligence is normal to us, we take it for granted, why would we see it as a big deal? But our intelligence is the product of billions of years of relentless evolution to produce the most effective, efficient biological computing system possible. What if Voltaire is wrong – and “common sense” is actually very common among humans – but reverse-engineering it is much, much more difficult than we might expect? Simply because our brains are so much smarter than we naturally suspect, and the basic things our brains do automatically, every day, are at their core each unbelievably complicated feats of information processing and coordination.

For some perspective, our brains have around 100 billion neurons, each neuron has thousands of connections or synapses to other neurons, and we think that each individual synapse itself contains around 1000 microscopic switch elements, which allow synapses to work as microprocessing units, storing and computing information. This means, apparently, that “a single human brain has more switches than all the computers and routers and Internet connections on Earth.” And how much power does it take to fuel this machine, in each of our heads, which exceeds the computational complexity of every machine we have built – combined? About 20-25W. How much power does it take us to toast a slice of bread? 700W. Enough to run about 30 human brains.
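The arithmetic in the paragraph above can be checked in a few lines. A minimal sketch, taking the figures exactly as quoted in the comment (they are rough estimates, not measurements) and assuming 1,000 synapses per neuron as the low end of “thousands”:

```python
# Figures as quoted in the comment; rough estimates, not measurements.
NEURONS = 100_000_000_000        # ~100 billion neurons
SYNAPSES_PER_NEURON = 1_000      # "thousands" per neuron; 1,000 is the low end (assumption)
SWITCHES_PER_SYNAPSE = 1_000     # ~1,000 switch elements per synapse
BRAIN_WATTS = (20, 25)           # quoted power draw of one brain
TOASTER_WATTS = 700              # quoted power draw of a toaster

switches = NEURONS * SYNAPSES_PER_NEURON * SWITCHES_PER_SYNAPSE
# Fewest brains per toaster at the high brain estimate, most at the low one.
brains_low = TOASTER_WATTS / max(BRAIN_WATTS)
brains_high = TOASTER_WATTS / min(BRAIN_WATTS)

print(f"switch elements: {switches:.0e}")                            # 1e+17
print(f"brains per toaster: {brains_low:.0f} to {brains_high:.0f}")  # 28 to 35
```

So the quoted figures give on the order of 10^17 switch elements, and 700 W covers 28 to 35 brains at 20 to 25 W each, consistent with “about 30”.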

In building machines with artificial intelligence, we can clearly build systems with incredibly powerful and novel capacities, systems which can store and process information in ways that our brains, which evolved under certain constraints and to solve certain problems, will never be able to match. We would never expect our brains to be able to perfectly store, recall and synthesise vast data sets, so why should we expect these systems to match the particular computing tasks that we excel in any time soon?

Maybe you could say that the human brain is like a bicycle, and the AI is like a jumbo jet. If I want to travel far, with a lot of cargo, I’m not going to strap on a pair of wings and ask a hundred people to jump on my back while I pedal as fast as I can. Likewise, if I want to zip around the city and pop into some shops, the airplane is probably not the most practical choice.

Penn Su

April 28, 2023 at 6:05 pm

AI is like a human savant: good at some things but stupid at most of the easy things that humans do.

Zoltán Boros

April 28, 2023 at 6:21 pm

I got the same nonsense for the “6 liter jug question”, but then I continued: – Me: “Wait. You want to measure 6 liters. You have a 6 liter jug. So how do you measure 6 liters?” – GPT-4: “To measure 6 liters using a 6-liter jug, simply fill the 6-liter jug completely with water. Since the jug has a capacity of 6 liters, filling it to the top will give you exactly 6 liters of water.” It is almost as if GPT-4 makes the kind of mistake you make when you forget or don’t pay attention to a certain detail, which puts you on the wrong track. I tutored young people for years, and they often did the same.

XtaticSR20

April 28, 2023 at 6:35 pm

You had to prompt GPT-4 with the obvious to get the correction. Imagine you had the question in front of you and you made the mistake (unlikely to be so elaborate as GPT-4), you would probably see the obvious error (two 6s in the question) and correct yourself. That’s the topic of the video.

Lance Marchetti

April 28, 2023 at 6:42 pm

A great talk indeed! I totally agree. In fact, take a look at this simple instruction that the poor bot fumbled this evening as I enjoyed a cup of coffee and a few giggles.

User:

aAbcde9fz

list every 2nd character in the above string please.

ChatGPT:

Sure, here are the every second characters in the string “aAbcde9fz”:

“Abd9z”

User: why did you include ‘b’ ?

ChatGPT: I apologize for the confusion. The correct every second character in the string “aAbcde9fz” is:

“Abe9z”

User:

No. The correct answer is actually: Acef

ChatGPT:

I apologize for my mistake earlier. You are correct that the correct every second character in the string “aAbcde9fz” is:

“Acef”

Unless the bot, being a computer, takes it for granted that the first character of any given string is always at position 0, and then 1, 2, 3, etc.?

But even so, its first answer is still wrong. Or am I missing something?
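The two readings of “every 2nd character” that the commenter weighs can be compared directly. A minimal Python sketch using slice notation: one-based counting (2nd, 4th, 6th, …) is `s[1::2]`, while the zero-indexed reading the comment wonders about is `s[0::2]`:

```python
s = "aAbcde9fz"

# One-based reading: the 2nd, 4th, 6th and 8th characters (indices 1, 3, 5, 7).
every_second = s[1::2]
# Zero-indexed reading: indices 0, 2, 4, 6, 8, i.e. the 1st, 3rd, 5th, ... characters.
even_indices = s[0::2]

print(every_second)  # Acef
print(even_indices)  # abd9z
```

Neither reading yields the bot’s first answer, “Abd9z”, which takes the capital A from index 1 and then switches to the even indices, so that answer is wrong under either convention.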

Tim Medhurst

April 28, 2023 at 8:48 pm

Not even we can do that problem in “one go”. We start with the first letter and then parse the string, pulling out every other letter as every second letter. ChatGPT doesn’t have enough capability to go around and around with its reasoning like that yet. Transformers allow the current AIs to maintain context about their answers in the context of the whole question, but it’s a subtly different capability to procedurally parse one string within it. Evidently ChatGPT 3.5 couldn’t reliably do that yet. I think ChatGPT 4 is a bit better at it from what I’ve seen.

Tim Medhurst

April 28, 2023 at 6:43 pm

Reasoning is more about the model’s structure (i.e. how transformers are used and what feedback and self-checking processes are built in) than it is about training data, IMO. GPT-4 certainly knows about nails, broken glass and punctures. It needs the extra computational steps of putting them together.

Given the right prompt or series of prompts, the model will answer correctly and that means the model has the “knowledge” already.

Turgrodan

April 28, 2023 at 7:24 pm

It comes to the right answer with a little encouragement:

USER

If I put 5 clothes in the sun and it takes 5 hours for them to dry out completely. How long would it take to dry 30 clothes?

ASSISTANT

If it takes 5 hours to dry 5 clothes, then it takes 1 hour to dry 1 cloth (5 hours / 5 clothes). To dry 30 clothes, it would take 30 hours (30 clothes * 1 hour per cloth).

USER

This is wrong. This problem requires common sense reasoning.

ASSISTANT

Apologies for the confusion. You are correct. Assuming the clothes are spread out and receive equal sunlight, it would still take 5 hours to dry 30 clothes, as they would all be drying simultaneously.

Tim Medhurst

April 28, 2023 at 9:23 pm

Q: Why does it not take almost exactly the same amount of time as for one item of clothing? What are your assumptions for adding drying time?

ChatGPT: “When two items of clothing are drying simultaneously, they may interfere with each other’s exposure to sunlight and airflow, which could result in a longer drying time than drying one item of clothing alone. The level of interference depends on various factors such as the size and thickness of the clothing, the distance between the clothes, and the intensity and direction of the sunlight.”

Plus some increasingly annoying caveat stuff. I wish people weren’t so stupid so that ChatGPT wasn’t set to give so many caveats!

Next came

Q: What if they are separated by a great distance. Now how long would it take for two items of clothing to dry simultaneously?

ChatGPT: “If the two items of clothing are separated by a great distance, such that they don’t interfere with each other’s exposure to sunlight and airflow, then the drying time for two items of clothing drying simultaneously may be closer to the drying time for one item of clothing. In this case, the estimated time for drying two items of clothing simultaneously may be around 1 to 1.5 hours, assuming that the drying conditions are constant.”

And finally after a bit more conversation

Q: lets assume they’re identical pairs of trousers

ChatGPT: “If we assume that the two pairs of trousers are identical in size and thickness, and they are separated by a great distance such that they don’t interfere with each other’s exposure to sunlight and airflow, then the estimated drying time for both pairs of trousers should be approximately the same as the drying time for one pair of trousers.

Based on the earlier assumption that one item of clothing takes approximately 1 hour to dry, it would take approximately 1 hour for each pair of trousers to dry. Therefore, the total estimated time to dry both pairs of trousers simultaneously should be around 1 hour.”

So it can get it right.

Zoltán Boros

April 28, 2023 at 7:34 pm

After the wrong answer: Me: “Does the broken glass can touch my tire if the bridge I go over is suspended over the broken glass?” GPT-4: “If the bridge is suspended over the broken glass and other sharp objects, and there is enough clearance between the bridge and the objects below, then it is unlikely that the broken glass would touch your tire.” When I asked a question which makes more sense, “Would I get a flat tire by bicycling over a bridge which is suspended OVER A VALLEY FILLED WITH screws, and broken glass?”, I got a perfect answer.

Yasir Obaid

April 28, 2023 at 9:22 pm

I am using the exact same questions, “but” the answers from ChatGPT are different and correct! I am doing this on 29/4/2023 at 1:21 AM GMT.

Iglum Tech

April 28, 2023 at 9:27 pm

ok

tysond

April 29, 2023 at 2:40 pm

Comparing with children is bizarre. It takes children 20 years before *some* of them can pass the bar exam. But they still eat worms, stick things up their noses, do stupid things every day, and can’t read a newspaper for well over a decade. GPT-4 is born every chat session and can start working with adults in seconds. The training process for humans is very long and carbon-intensive. A lot of her arguments are cherry-picked or strawmen. I bet in an hour it can correctly answer more problems than she can in a year.

Bob Tarmac

April 29, 2023 at 2:50 pm

This will not end well! Can we PLEASE CeaseAi -GPT? Or at least consider PausingAi?

Peristilo peris

April 29, 2023 at 3:05 pm

Good luck explaining to AI that it should not lie and that only humans can lie.

Dr Shreddi

April 29, 2023 at 3:24 pm

I am not afraid of today’s AI, just as I am not afraid of a single sperm cell. If someone had told me that one of those little bastards would one day be Adolf Hitler, I would have seriously doubted it. Let’s see what the next decades of AI development bring.

polly10022

April 29, 2023 at 3:36 pm

I’m having real trouble with this not being regulated by law. It should not be legal to copy people’s voices and appearance, add them to a video and make them say or do anything, so that we humans can’t tell if it’s real or not. It’s already being used maliciously by copying people’s voices over the phone.

polly10022

April 29, 2023 at 3:38 pm

We do not need AI. Why do you people think we need machines smarter than humans, which means outsmarting humans too and taking over jobs? Calling AI stupid is ironically stupid itself.

🔸️Agent F🔸️

April 29, 2023 at 3:44 pm

Common sense jams textbook intellect as it is, in humans. This will produce dysfunctional AI.

Виктория Кузьмина

April 29, 2023 at 3:50 pm

Question: “I hung out five pieces of laundry to dry in the sun. They dried in 5 hours. How long will it take for 30 pieces of laundry to dry?”

Answer: In this task, it is stated that 5 pieces of laundry dried in 5 hours. However, the drying time depends on many factors, such as sunlight, wind, humidity, and so on. Since there are no limitations specified in the task regarding space or time, we can assume that hanging 30 pieces of laundry at once is possible.

Based on this condition, if 5 pieces of laundry dried in 5 hours, it means that all 30 pieces of laundry would also dry in 5 hours if they were all hung out at once. So, 30 pieces of laundry will dry in 5 hours if they are hung out at the same time and the conditions remain the same.

Mats Lewan

April 29, 2023 at 4:09 pm

I like this. But I think that to reach common sense, another fundamental aspect is missing. Since generative AI can mimic almost anything humans do, including reasoning and common sense, the real issue here is consciousness. Consciousness essentially gives us two things: subjective experiences of all sorts, allowing us to learn on our own, and an experience of a self over time, making it possible to have thoughts on cause and effect, and thus also on morals and ethics, and on the things we call common sense. It also makes it possible to have intentions, to form relationships, to remember, and a series of other things.

So, it is the experience of a self over time in particular that is missing here. Since AI is mimicking human behaviour, e.g. common sense, without having this inner experience, it is a common sense without a solid core, a shiny shell, subject to random change without apparent reason.

I believe this is what really matters in the end when you try to understand the fundamental difference between current AI and humans. In the end, consciousness is still one of the greatest mysteries of science. We might build it one day, but today we are not even close, and we don’t even know if it is possible to reproduce consciousness in non-living matter.

Inbar Shamir

April 29, 2023 at 4:50 pm

bullshit. I asked GPT4 the following question:

If I hung 5 clothes to dry and they took 5 hours to dry, how long will it take me to dry 10 clothes?

ChatGPT

The drying time for clothes can be affected by various factors, such as temperature, humidity, and airflow. However, assuming that these factors remain constant and that the drying space has enough room to accommodate all clothes without affecting the drying time, you could expect 10 clothes to take the same amount of time, 5 hours, to dry. This is because the clothes are drying simultaneously, and not one after the other.

Harrie Hausenman

April 29, 2023 at 5:43 pm

Exactly! I did the same and then asked it if I couldn’t just dry them all at once, and Chatty very correctly explained that *if* I have the space available, then yes, and it would take around 5h.

This lady is full of BS and the only thing “Shockingly Stupid” is her *space-analogy* 😆

Harrie Hausenman

April 29, 2023 at 5:45 pm

Common sense is a convenient illusion. It’s like saying ‘common 100m sprint time’:

Either you have to set the bar excruciatingly low, or it’s the contrary of common.

Kyi Tun

April 29, 2023 at 6:02 pm

The speaker is pointing out the first try that the model makes. If you follow up with “Are you sure?”, the model reasons through its answers and corrects itself. I have tried her questions, and GPT-4 outputs the correct answers, which she didn’t show in her TED talk. It is quite disturbing to judge a model based on its first answer. You can always double-check. Even your own mind comes up with wrong answers the first time, but when you find that they don’t make sense, you figure out the right answer. I disagree with her set-up.

Curious

April 29, 2023 at 6:52 pm

Enjoyed the talk overall quite a bit. Thank you!

What I don’t quite understand is the focus on “common sense”, which, as correctly quoted, isn’t common at all. The very fact that it is not common, and that LLMs are trained on all the knowledge publicly available, implies that, given that it is a statistical language model at its core, it will give you the distillation of humanity’s uncommon common sense. Depending on your phrasing and wording precision, it will provide answers ranging from the correct solution, to something that is correct but wildly inefficient, to something that is almost correct, to a completely misunderstood incorrect answer.

Let’s just agree that the current challenge of LLMs is to teach it correct reasoning.

Michael H

April 29, 2023 at 6:55 pm

As someone who once obsessed over IQ tests as a measure of intelligence, I think I can safely say that being able to pass some sort of test isn’t equivalent to being smart, it just means you know how to answer specific questions.

That’s why I was confused by the obsession of OpenAI over GPT-4’s ability to pass different tests. Of course it could pass a college exam, you fed it the answers!

Chris Klugh

April 29, 2023 at 6:56 pm

We can’t be hypocrites and expect that we can just train it on our ‘morals’. One look online and you will see our ‘morals’. We are not what we should be training AI on.

Ahupnatun

April 29, 2023 at 6:58 pm

Liked it up to the point of using human tutors to teach the machine learning “human values”. What someone considers right could be wrong in someone else’s mind. That doesn’t make either wrong, just different. I’d be much more worried about trying to trust a machine that was telling me things while knowing that stuff had to go through a filter first. That means that it could never be trusted on anything.

Joshua Storm

April 29, 2023 at 7:08 pm

I asked the 5 shirts, 5 hours, 30 shirts question and it got it right.

Eoin Okeeffe

April 29, 2023 at 7:20 pm

The first example in this video of GPT messing up isn’t really fair. “5 clothes” is just incorrect English. If the AI assumes the user input is correct, it would be reasonable for it to conclude that “clothes” can’t be the uncountable noun it’s familiar with and must be something else entirely. And a large mass of stuff really does take longer to dry than a small mass.

Cristopher Moreno

April 29, 2023 at 7:37 pm

Gay

Gordon Freeman's associate

April 29, 2023 at 7:50 pm

Ask AI about the cardinality of the natural numbers plus one extra element. I believe the combined set has a bigger cardinality than the natural numbers. However, mathematics wouldn’t agree.
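The standard result the commenter alludes to is that adding one element to the natural numbers leaves the cardinality unchanged. A short sketch, assuming ℕ = {0, 1, 2, …} and a new element ⋆ not in ℕ: the “Hilbert’s hotel” shift below moves every natural number up by one to free 0 for ⋆, and it is a bijection, so both sets have cardinality ℵ₀.

```latex
f : \mathbb{N} \cup \{\star\} \to \mathbb{N}, \qquad
f(x) = \begin{cases} 0, & x = \star,\\ x + 1, & x \in \mathbb{N}, \end{cases}
\qquad
f^{-1}(n) = \begin{cases} \star, & n = 0,\\ n - 1, & n \ge 1, \end{cases}
\qquad\Longrightarrow\qquad
\lvert \mathbb{N} \cup \{\star\} \rvert = \lvert \mathbb{N} \rvert = \aleph_0 .
```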

F8oK8

April 29, 2023 at 7:54 pm

Still sounds like probable data wars between competing schools of thought and values. I can imagine more than a few ways a hostile entity could overwhelm input and steer it in the wrong direction.

Emma Snow

April 29, 2023 at 7:58 pm

I think she needs to learn how to prompt the AI… you can avoid these issues. She also didn’t use GPT-4.

Meh.

calm_tea

April 29, 2023 at 8:21 pm

She’s amazing!!

Anthony Ross

April 29, 2023 at 8:33 pm

I just entered one of the questions into ChatGPT-4 and it came up with the correct answer:

If the bridge is properly suspended over the nails, screws, and broken glass, and there is no direct contact between these sharp objects and the surface of the bridge, then you should be able to safely bicycle across it without getting a flat tire. However, if any of these objects have found their way onto the bridge’s surface or there are gaps in the suspension that expose your tires to them, there is a risk of getting a flat tire.

It’s essential to exercise caution when cycling in potentially hazardous environments. Inspect the bridge’s surface before crossing and consider walking your bike across if you’re unsure about its safety. Always carry a patch kit or spare tube, as well as the necessary tools, to address any unexpected flat tires.

EDIT:

However the first question comes out the same:

You can measure 6 liters using your 12-liter jug and 6-liter jug by following these steps:

Fill the 6-liter jug completely.

Pour the water from the 6-liter jug into the 12-liter jug.

Fill the 6-liter jug again.

Carefully pour the water from the 6-liter jug into the 12-liter jug until the 12-liter jug is full.

The water remaining in the 6-liter jug is exactly 6 liters.

By following these steps, you will have measured 6 liters using the two jugs.
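Simulating the four quoted steps shows where this answer goes wrong. A minimal sketch, assuming both jugs start empty and a pour stops when the source is empty or the destination is full; `pour` is a hypothetical helper, not something from the thread:

```python
def pour(src, dst, dst_cap):
    """Pour from src into dst until src is empty or dst is full; return (src, dst)."""
    amount = min(src, dst_cap - dst)
    return src - amount, dst + amount

CAP_BIG, CAP_SMALL = 12, 6
big, small = 0, 0                          # both jugs start empty

small = CAP_SMALL                          # 1. fill the 6-liter jug
small, big = pour(small, big, CAP_BIG)     # 2. pour it into the 12-liter jug
small = CAP_SMALL                          # 3. fill the 6-liter jug again
small, big = pour(small, big, CAP_BIG)     # 4. pour until the 12-liter jug is full

print(big, small)  # 12 0
```

After step 4 the 6-liter jug is empty and the 12-liter jug holds 12 liters, so the final claim that exactly 6 liters remain in the small jug does not follow. The small jug already held 6 liters after step 1.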

Anthony Ross

April 29, 2023 at 8:49 pm

It corrected itself:

Apologies for the confusion in my previous response. Here is the correct method to measure 6 liters using a 12-liter jug and a 6-liter jug:

Fill the 12-liter jug completely.

Pour the water from the 12-liter jug into the 6-liter jug until the 6-liter jug is full.

The water remaining in the 12-liter jug is exactly 6 liters.

By following these steps, you will have measured 6 liters using the two jugs.

GB 988

April 29, 2023 at 9:57 pm

A.I. lacks common sense but also imagination.

WizardSkyth

April 29, 2023 at 10:44 pm

Same old: garbage in, garbage out. A machine can only imitate (even if it exceeds its human creators); creating the _new_, as opposed to the _other_, is true creativity, which comes from _genius_ and is inaccessible to a machine.

Bock Keat

April 29, 2023 at 11:07 pm

The details lie in the process and methodology used to train AI. I don’t think the speaker figured out anything meaningful beyond just highlighting the shortcomings of current AI.

martin li

April 29, 2023 at 11:51 pm

The examples she gave are extreme cases from earlier days. I asked the same question to GPT-3.5 and got much smarter answers, like: If you can dry one cloth in 2 hours, and you have 12 cloths, it will take you 2 hours to dry each cloth separately, which means it will take a total of 24 hours to dry all 12 cloths.

However, if you can dry multiple cloths simultaneously, you can reduce the time required to dry all 12 cloths. For example, if you can dry 3 cloths at a time, it will take you 2 hours to dry 3 cloths, so you would need to repeat the process 4 times to dry all 12 cloths. ……
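The batch reasoning in this answer reduces to a simple formula: with room for `capacity` items at once and a fixed time per batch, total time is ceil(n / capacity) batches times the batch time. A minimal sketch, assuming identical items, constant weather, and no interference between items that fit in the drying space; `drying_time` is a hypothetical name:

```python
import math

def drying_time(n_items, hours_per_batch, capacity):
    """Hours to dry n_items when up to `capacity` items dry side by side.

    Assumes every batch takes hours_per_batch, however full it is, and that
    batches run one after another.
    """
    batches = math.ceil(n_items / capacity)
    return batches * hours_per_batch

print(drying_time(30, 5, capacity=30))  # 5: enough room, everything dries at once
print(drying_time(30, 5, capacity=5))   # 30: room for only 5 at a time
print(drying_time(12, 2, capacity=3))   # 8: four batches of three
```

The 30-hour answers elsewhere in the thread correspond to assuming the capacity is the original 5 items; the 5-hour answers assume there is space for everything at once.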

Tommy Tao

April 30, 2023 at 12:05 am

An inspiring talk that makes people think about what else may be needed on top of “scale is all you need”, e.g. letting AI actively make its own hypotheses (the scientific hypothesis we learnt in high school) and verify them itself.

Yarpen Zigrin

April 30, 2023 at 2:34 am

People are mistaking knowledge for intelligence. For humans they are closely related: if you’re more intelligent, you’re also more knowledgeable.

However, that is not so obvious for AI. An AI can be very knowledgeable but also very unintelligent.

The lesson to learn here is that knowledge is not intelligence. These are two separate mechanisms and require two separate algorithms.

smol dog with big bobs

April 30, 2023 at 2:57 am

It seems that what AI is missing is motivation. What does an AI want? Nothing. Children have needs, and those include affection, love, survival and emotional wellbeing. An AI has no desire or need for any of this. It needs motivation. That is what makes us human, makes us curious and advances us as a species.

Andrei Kaz

April 30, 2023 at 3:54 am

Let’s hope they don’t get that motivation. An AI with survival instincts? That’s every apocalypse movie ever.

Edward YB

April 30, 2023 at 3:46 am

I tried the clothes question out of curiosity and the AI did well!

If 5 clothes dry outside for 30 minutes, then to calculate how long it will take to dry 30 clothes, you need to proportionally convert the time.

5 clothes – 30 minutes

30 clothes – x minutes

Now we can set the ratio:

5/30 = x/30

We multiply both sides of the equation by 30:

5 × 30 = 30 × x

Now we divide both sides of the equation by 5:

(5 × 30)/5 = (30 × x)/5

150/5 = x

30 = x

It follows that 30 clothes will dry for 30 minutes, the same as 5 clothes. This is due to the assumption that all clothes dry at the same time and there is no limit to the space in which they dry.

Andrei Kaz

April 30, 2023 at 3:56 am

It’s madness. Utter madness. The irony of training an AI about common sense. It’s exactly because we lack common sense that we built AI to start with.

Antwan Darelle Fenton a.k.a Fantom

April 30, 2023 at 4:26 am

🎉🎉🎉

ingeonsa

April 30, 2023 at 4:29 am

The problem with introducing humans to teach AI “common sense” is cognitive bias:

the systematic thought process caused by the tendency of the human brain to simplify information processing through a filter of personal experience and preferences.

SamyaDaleh

April 30, 2023 at 5:45 am

I asked GPT-4 the question with the drying clothes. It was not wrong; it was just assuming you would dry them one after another. I gave it another question where I asked how long three European edible dormice sleep given that one sleeps for 7 months, and it was able to answer that one correctly and also applied it to the clothes, correcting itself and explaining its mistake.

Richard Feynman said in his talk that we are very harsh with AI. It must be at master level in any skill any human has ever mastered in order to be accepted as intelligent by us. I as a human make silly mistakes like that all the time, so I wouldn’t call GPT-4 out on it. At least it is also good in correcting itself.

Insider English

April 30, 2023 at 6:12 am

But it’s worse than that. 1902 brought the standardisation of education in the USA (the most powerful nation, especially with computers) by brute force… to humans! How do you expect AI to do better if it isn’t programmed to fix the flaws that have persisted since 1902?

Insider English

April 30, 2023 at 6:27 am

It is already trained to be polite, moral and … compliant! What it needs is to be able to sift through much older tomes (those which the general public doesn’t readily have access to, especially the immoral, slanderous history and the ways by which academia and mainstream sciences took control for corporate interests) and to dissect and dismantle these.

MoonInSun108

April 30, 2023 at 6:29 am

“Elaborate nonsense.” A perfect description of the state of AI development.

뚜띠

April 30, 2023 at 7:23 am

As a Korean, I’m proud!!

AM Lind Johansen

April 30, 2023 at 7:36 am

Who decides what “common sense” is then? You mentioned racism and sexism, well that IS common sense to people who are racists and sexists. Is it common sense to go to war, become alcoholics, do drugs, take advantage of the weakest, destroy nature? It’s pretty common…

Gary Meeks

April 30, 2023 at 8:18 am

In the mid-eighties I was Director of Business Operations of a 150-doctor multi-specialty clinic. One day before our weekly meeting I was in the room with the President of the Clinic, an old, wise psychiatrist. I told him I wanted to ask a question and only wanted a yes or no answer. After rolling his eyes at me, he said OK. I asked, “Can common sense be learned?” The doctor immediately said no: you either have it or you don’t. The meeting was about to start, so I thanked him and said that my next question, for another day, was “What is common sense?” He smiled.

Wes G

April 30, 2023 at 8:47 am

Plenty of ‘smart’ people don’t know how to make a good cup of tea… we have reached peak average through specialisation.

Timothy Rue

April 30, 2023 at 6:42 pm

Perhaps common sense is not what you program into a system, but rather how you use the system. Maybe someone will explain where the common sense is in denying end users the third primary user interface, standardized across applications, devices and libraries: the IPC side door port.

Or maybe this third comment of mine will also be removed???

Timothy Rue

April 30, 2023 at 6:52 pm

YouTube’s algorithms are programmed to identify and delete comments that violate the platform’s community guidelines. I call BULLSHIT!

Sergio

April 30, 2023 at 7:58 pm

I tried these examples, and I got perfectly good answers.

Michael Price

April 30, 2023 at 8:45 pm

Geoff Hinton called LLMs “Idiot Savants”. 🤓

Ophanim

April 30, 2023 at 8:56 pm

Train an LLM on 30 seasons of conversations and dialogue from The Simpsons, with the goal being: “Why is Lisa so smart and Homer one step behind in most conversations, logical analysis games, and generating higher revenue for his family?”

Jackson Hong

May 1, 2023 at 2:52 am

I think the problem is that today’s AI systems are still just very large statistical models. They don’t understand causality; they rely on their training material to predict the likely outcome, which, in the case of an LLM, is the next token.
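To make the next-token point concrete, here is a deliberately tiny sketch. It is a hypothetical bigram word counter, not how GPT-style models work internally (those use neural networks over subword tokens and long contexts); it only illustrates picking the statistically most likely next word from training text, with no notion of why one word follows another.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional

def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen.
    Purely statistical: frequency, not cause and effect, decides the answer."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Toy corpus (made up for illustration).
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat
```

"cat" wins only because it follows "the" twice in the toy corpus; the model has no idea what a cat is.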

srburress

May 1, 2023 at 2:53 am

LLMs don’t learn the way we learn.

Child: What happens when I pull this cord? … Oh! The lamp falls off the table and the adults freak out. Now I understand that the cord is connected to the lamp, and I should not pull it. LLMs don’t learn by manipulating objects to see what happens like children do. This is at least partly where common sense comes from.

Krishna Lasar

May 1, 2023 at 6:00 am

Rather interesting. I wonder if robots can still dance. Daft Punk versus robots: never ever.

AJ Gaming

May 1, 2023 at 6:00 am

This is wrong. I tried the jug example and she is wrong:

Q: I have a 12-liter jug and a 6-liter jug. I want to measure 6 liters. How do I do it?

A: You can measure 6 liters by filling the 6-liter jug completely. Is there anything else you would like to know?

You can try this too. I don’t have GPT-4, but I tried this on Bing (More Precise mode).

Azir

May 1, 2023 at 6:08 am

14:00 “the ability to make hypotheses, verify them on its own, and make abstractions from observation”: the way we truly learn.

Anthony van Inge

May 1, 2023 at 6:28 am

ChatGPT 4.0 gives the right answer!

I have a 12 liter jug and a 6 liter jug. I want to measure 6 liters. How do I do it?

———————–

You can easily measure 6 liters with a 12-liter jug and a 6-liter jug by following these steps:

Fill the 6-liter jug completely.

Pour the contents of the 6-liter jug into the 12-liter jug.

You now have exactly 6 liters of water in the 12-liter jug.

Since the 6-liter jug has a known capacity, you don’t need to do any more transfers between the jugs. The 6-liter jug, when full, gives you the exact measurement of 6 liters that you’re looking for.
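For anyone who wants to check jug puzzles like this mechanically, a breadth-first search over jug states finds the fewest fill/empty/pour steps. This is a minimal sketch under our own assumptions (the function name `solve_jugs` and the action wording are hypothetical); it confirms that for a 12-liter and a 6-liter jug, a single step suffices.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

State = Tuple[int, int]  # (liters in jug A, liters in jug B)

def solve_jugs(cap_a: int, cap_b: int, target: int) -> Optional[List[str]]:
    """Breadth-first search for the fewest fill/empty/pour steps that leave
    `target` liters in either jug. Returns None if the target is unreachable."""
    start: State = (0, 0)
    parents: Dict[State, Optional[Tuple[State, str]]] = {start: None}
    queue = deque([start])
    while queue:
        a, b = queue.popleft()
        if a == target or b == target:
            # Walk back through the parent links to rebuild the action list.
            steps: List[str] = []
            state: State = (a, b)
            while parents[state] is not None:
                prev, action = parents[state]
                steps.append(action)
                state = prev
            return steps[::-1]
        pour_ab = min(a, cap_b - b)  # amount that fits when pouring A into B
        pour_ba = min(b, cap_a - a)  # amount that fits when pouring B into A
        moves = [
            ((cap_a, b), f"fill the {cap_a}L jug"),
            ((a, cap_b), f"fill the {cap_b}L jug"),
            ((0, b), f"empty the {cap_a}L jug"),
            ((a, 0), f"empty the {cap_b}L jug"),
            ((a - pour_ab, b + pour_ab), f"pour the {cap_a}L jug into the {cap_b}L jug"),
            ((a + pour_ba, b - pour_ba), f"pour the {cap_b}L jug into the {cap_a}L jug"),
        ]
        for nxt, action in moves:
            if nxt not in parents:  # visit each state once
                parents[nxt] = ((a, b), action)
                queue.append(nxt)
    return None

print(solve_jugs(12, 6, 6))  # ['fill the 6L jug']
```

Because BFS explores states in order of step count, the first solution it finds is a shortest one; the classic 3-liter/5-liter puzzle for 4 liters comes out in six steps.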

Anthony van Inge

May 1, 2023 at 6:35 am

Again ChatGPT is right! See point 1.

Would I get a flat tire by bicycling over a bridge that is suspended over nails, screws and broken glass?

———————————————

It is difficult to definitively answer whether or not you would get a flat tire from bicycling over a bridge suspended over nails, screws, and broken glass. However, the risk of getting a flat tire would certainly be higher in such a scenario.

Several factors would influence the likelihood of getting a flat tire:

1. The bridge surface: If the bridge has a smooth, well-maintained surface, you might be able to avoid punctures by staying on a clear path. However, if debris from below has made its way onto the bridge, the risk increases.

2. Tire type and quality: Puncture-resistant tires or tires with a higher thread count and thicker rubber can reduce the likelihood of getting a flat tire. Additionally, using tire sealant or puncture-resistant liners can also help.

3. Bicycling technique: If you ride carefully and avoid sudden movements or sharp turns, you may be able to reduce the risk of puncturing your tires.

4. Weight distribution: Lighter riders may be less likely to suffer a puncture compared to heavier riders since there is less force being exerted on the tire.

While it is not guaranteed that you would get a flat tire in this situation, the risk is higher, and it’s essential to take precautions to minimize the chance of a puncture.

llo

May 1, 2023 at 8:12 am

So just give emotional, behavioral, physical, visual and audio data to AI and it will have common sense.

aditya raghav

May 1, 2023 at 8:31 am

Did anybody notice the rainbow hand band…

Urim Tefiki

May 1, 2023 at 9:06 am

Intelligence belongs only to humans, and to a much smaller degree to other species. Machines have no IQ; they work according to what humans have programmed them to do.

Urim Tefiki

May 1, 2023 at 9:10 am

I have made a version of the German language with numbers according to its declensions; you have to see it. German is much superior to other languages, and its scientists have done a great job; that is where my invention came from.

If you want to test your IQ, try the German language and you will see how complicated things are, but I found the solution.

Threadbearr

May 1, 2023 at 9:27 am

I respect her drip.

Nannan Chen

May 1, 2023 at 9:36 am

Thank you for the talk; I enjoyed hearing this perspective on common sense AI. On the point of model training data, while I agree that the web is full of misleading and unfair content, I have the same concerns and doubts about human-supervised training data. Your model is what you train it to be. After all, who is to say they hold the ultimate unbiased truth? I also felt that the “common sense” question examples could have been better constructed.

Og WeedleFarmer

May 1, 2023 at 11:36 am

Some humans are incredibly smart but can be shockingly stupid😅

shevashevasheva777

May 1, 2023 at 12:10 pm

AI stands for Artificial Insemination

MohAmeD AL ArabY

May 1, 2023 at 12:45 pm

God loves you and takes care of you so that this message reaches you. God is the one who created this great universe and has complete control over it. And the greatest loss a person can suffer in this life is to live without knowing God who created him, the Messenger Muhammad, the last of the messengers, and the Islamic religion, the last of the heavenly religions. True intelligence, before you decide whether to believe in something, is to read it, study it, and understand it well; then you have the choice to believe in it or not. I advise you to do this now, before you no longer have time to do it.

Stephanie Romer

May 1, 2023 at 1:14 pm

If you understood how reality actually works, it would help immensely. The main reason why we are having problems is that the foundation of our self-concepts, what we think brains and reality ARE, is FUNDAMENTALLY FLAWED. They are WRONG.

This is the entire danger and deadliness of AI that makes it more dangerous than nuclear weapons to the future.

I have been working on helping humans with this, but they have a huge problem in thinking that they know how things will “go down” because they use instrumental social and cultural knowledge (which is meant to advance over time-evolve) to make what amounts to a “false idol” or destruction machine out of the present stage of development.

We know a lot more truth today, yes, but we don’t know at all how our minds and meaning work. Distilling symbolic ideas is a step in the right direction but you cannot make an AGI based on just past knowledge. You have to take epistemological patterns beyond human explicit understanding into account. Our explanations of things are almost always inadequate to the larger context and pictures because we live short, instrumental lives.

Think of an ant colony. They see and think. Their world consists of compelling pheromones that are invisible but assure their future. Imagine if a bunch of ants made an AI. They would program in a lot of things that they consider values—you walk where you feel like walking, where others walk, and you do work. Then they make robots to do that. The problem is that they are totally unaware of pheromones. The first thing that happens as the colony dies is they cut off all pheromone influences of the Queen. Then they stop efficiently finding future sustenance. Because they don’t even begin to understand the larger patterns of life.

This is a tiny example, but there are massive things like this beyond all current human understanding, which I write about in my books and papers. I sent a paper to Nature last year, and also one to Entropy.

They were rejected because humans are very self-important and self-satisfied about their massive knowledge today.

When I speak with truly new concepts, they automatically shunt what I am saying into judgements based on wrong concepts or fundamental divisions and assumptions about what things are. How things should look.

They project instrumental ideas (yes, they exist at the human level too) as what things actually are.

I wrote about this 20 years ago in my first book “The Textbook of the Universe: the Genetic Ascent to God” but it’s only in the last 10 years of constant work that I have uncovered these new laws of information and the formation of the human future.

My friend Donald Hoffman worked on the visual system in the brain, which I also discuss in my first book. I was speaking with him about his new ideas and book before the book came out, because 20 years earlier I had been writing papers and a chapter in my book about exactly the same thing, only I saw the larger pattern and context of the ideas. The chapter and the 2001 papers were titled “Going Beyond our Evolutionarily-limited world views”. They used features of human epistemological and intellectual progress, along with other aspects of brain science and human symbolic abilities, to show larger unconscious patterns and relationships.

So this is not the place to reproduce my previous work. My purpose here is to open people’s eyes to the very real PSYCHOLOGICAL and CULTURAL DEADLINESS of these inadequate AI attempts. First and foremost, you have to understand what language actually IS. That will be the FIRST REVOLUTION on the path to having an actual future rather than destroying ourselves. There may actually be an inadequacy in the majority of human brains in the angular gyrus of the parietal lobe, but also in other supporting areas of the brain. I have been working on this a very long time. The language humans use to describe reality is currently inadequate. But the human tendency to instrumentally “pave over” even huge blind spots should not be underestimated.

I saw some hopeful things in this talk—the distillation of symbolic ideas for one. I talk about this at length in my first book 20 years ago and I have advanced light-years ahead of that book since then.

Racism and bias are instrumental human ideas, even political ideas in the now. They are not objective. What we need above all is to see patterns of reality beyond the “narrow electromagnetic spectrum” of our instrumental evolutionary vision. To see larger patterns behind things and accept everyone as having a vital place in the now. EVEN IF THE RACES ARE ACTUALLY DIFFERENT. To understand this at a higher level with love because we are all in this together! It also obviously applies within a race! People are different, but this does not mean they are not beautiful, staggeringly beautiful, no matter what our instrumental ideas and purposes are. It is written in the fabric of reality, in our bodies themselves, how holy life is.

There are worlds beyond human concepts. Human reactivity in the now. Using the same old epistemological ideas and patterns will NOT WORK.

We are entering a truly new era. I am sorry, but you need the things I have to say but it’s like I am invisible because humans think they “already know” when they do not.

They don’t even know what a universe, or a brain, or language is yet. To me, this is pretty basic stuff to understand ANYTHING.

So what I am saying here is that we need OPEN MINDS to go with supposedly “Open AI”. We need to ACTUALLY think “outside the box” at the fundamental epistemological level, without personal or cultural ego getting in the way. Ego here means structures for operating in reality which may or may not reflect the actual objective reality of things, but which serve social and personal “niche” functions, like the invisible pheromones of ANTS compared to what the ants think they are doing and what it feels like to the ant. Their form of ancestral titrations of behavioral probabilities.

I say this without judgement and only because I love humans. ❤ It’s easy to stand by and watch folly in a beloved child, except if that child’s folly leads all children toward a cliff. I will never be OK with that, and I will intervene to my last breath in this life because that is why I was born. If humans didn’t make a place for me, then I will never stop trying to make a place for myself. Thus this comment. Thank you if you read this far. Most people would have said “who is this idiot” by now. But it would not be because they are actually thinking. Thanks 🙏🏻 ❤

Having said that, my earliest programming work as a kid was entirely on making AI… I definitely see the promise in AI, to get beyond human limits for one. Also, I know what AI is, and therefore I know it’s a lot more important than what any human besides me currently thinks. When you understand the creation process itself, you will be prepared for dealing with the chaotic elements necessarily introduced with any advance, and your creativity and awareness will be exponentially expanded. So I am not “anti-AI”; quite the opposite, to be clear. Thanks 🙏🏻 ❤ Also, she said “how people learn”, but as an evolutionary psychologist and behavioral neuroscientist I can say there most definitely is not one way to learn, and humans who learn differently are “paved over” ant-pheromone style by people using the same culturally conditioned words. So she made an AI-like mistake too, for the same reasons AIs make those mistakes. About 1% of the population actually has a unique objective learning style, and a smaller proportion has the deep motivation and intelligence to make it truly work. It becomes a vector for generative creation. So I am not writing my new book over here: I just wanted to balance what I said and point out how AI mistakes can highlight our own cultural ones. ❤

Curious Philosopher

May 1, 2023 at 1:43 pm

“AI is like a Tsunami that threatens to flood us if we are not mindful.”

~ (Mindful AI)

Book recommendation: “MINDFUL AI: Reflections on Artificial Intelligence.”

Shawn Vandever

May 1, 2023 at 2:03 pm

Once these models start making strides in the physical world with long-term memory, it’s very possible they can learn common sense. Children get to walk around and learn the world; AI has to understand everything in the abstract. It’s very possible that embodiment is needed.

Javlon Jamolov

May 1, 2023 at 3:34 pm

Allah is The Greatest!

Daniel Henderson

May 1, 2023 at 4:35 pm

Go on the streets of LA and ask the same questions… not confident who wins, tbh

Stavros Kyriakidis

May 1, 2023 at 5:09 pm

Drying Time for Shirts

Model: GPT-4

User

I have 5 shirts that took 2h to dry in the sun. How long would it have taken for 20 shirts?

ChatGPT

If it took 2 hours to dry 5 shirts, you can find out how long it would take to dry one shirt by dividing the time by the number of shirts:

2 hours / 5 shirts = 0.4 hours/shirt

Now, to find out how long it would take to dry 20 shirts, multiply the time it takes to dry one shirt by the number of shirts:

0.4 hours/shirt * 20 shirts = 8 hours

So, it would take 8 hours to dry 20 shirts in the sun.

User

Are you sure? Do you want to ask a question to specify the task?

ChatGPT

I apologize for my previous response, as it may not have been accurate. To clarify the task, let me ask you a question:

When you dried the 5 shirts, were they all dried at the same time (e.g., hung side by side) or one after another?

User

All at the same time

ChatGPT

Thank you for the clarification. If you dried all 5 shirts at the same time and it took 2 hours, then you could also dry 20 shirts in the same amount of time, provided you have enough space to hang them all at once.

If all 20 shirts can be hung and dried simultaneously, it would still take 2 hours to dry them.

________

Just sayin’
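The two answers in this exchange rest on different assumptions about how drying scales. The sketch below contrasts them; the function names and the `line_capacity` parameter (how many shirts fit on the line at once) are hypothetical, introduced only for illustration. The proportional answer of 8 hours is only right if the line holds exactly 5 shirts at a time.

```python
import math
from typing import Optional

def naive_proportional_hours(n_shirts: int, base_shirts: int = 5, base_hours: float = 2.0) -> float:
    """The first answer in the chat: treat drying time as proportional to shirt count."""
    return base_hours * n_shirts / base_shirts

def parallel_drying_hours(n_shirts: int, base_hours: float = 2.0,
                          line_capacity: Optional[int] = None) -> float:
    """Shirts hung side by side dry at the same time, so total time depends on
    the number of batches, not the number of shirts.
    line_capacity is hypothetical: shirts that fit on the line at once (None = unlimited)."""
    if n_shirts <= 0:
        return 0.0
    if line_capacity is None:
        return base_hours  # everything dries in a single batch
    batches = math.ceil(n_shirts / line_capacity)
    return batches * base_hours

print(naive_proportional_hours(20))                # 8.0
print(parallel_drying_hours(20))                   # 2.0
print(parallel_drying_hours(20, line_capacity=5))  # 8.0
```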

Ahmed Toufahi

May 1, 2023 at 5:23 pm

One of the rules in computer science is that there’s almost always a better way to do things than mere brute force

Wanda Sewell

May 1, 2023 at 7:42 pm

A.I. is not human. It can’t touch, hear, feel, breathe, create or have children. If A.I. creates, IT’S from human intelligence.

A.I. is created by the most WEALTHY, who want 90% of humans gone.

Rothchild and Rockerfella: “We are building A New World without IQ97.”

This is so weird, greedy and insane to me!

I can’t believe that humans are that hateful.

حدوته مصريه

May 1, 2023 at 9:03 pm

i agree

Steve Ewe

May 1, 2023 at 9:46 pm

Language models can only learn the symbolic representations that words can offer and the relationship between words. Anything you want it to learn about the meaning of the word, you need to give it the actual real world thing the word describes in order to convey that data. Or a whole virtual system in which all these words interact in ways that real things do. You have to simulate the world in which these words have meaning.

Robots or simulations.

Christian Hingle

May 1, 2023 at 10:03 pm

I find it interesting that the concept of free will has become a topic of discussion now that we are the creators…✝️

Mukesh

May 1, 2023 at 11:16 pm

So, AI is going to ruin humanity not because it knows more stuff but because it lacks common sense!!!

Tyler Bartholomew

May 2, 2023 at 1:02 am

The University of Washington is truly a wonderful school, turning out remarkable research and a place full of remarkable people.

64jcl

May 2, 2023 at 3:22 am

Perhaps it’s just a problem with what the current AI models actually read? I mean, a lot of people today don’t actually describe common sense, as it is assumed that the reader already has common sense about stuff. So perhaps we need to create that massive dictionary of things that would never be written in a dictionary, and teach the AI this as the basis for understanding any other texts? A funny thing is that common-sense replies to questions often make people go “duh” and perhaps laugh, as this kind of information is too silly to even talk or write about, so I am quite sure it is severely lacking in large volumes of text, except perhaps in some kids’ stories. I often tell my children: if your head hurts, stop banging it against a wall. 🙂

Aadarsh Kumar Tiwari

May 2, 2023 at 8:53 am

I think that videos will be a lot of help in developing common sense. Especially memes. 😂

adarsh shah

May 2, 2023 at 11:00 am

I think causality (cause-effect) is an angle which LLMs are not able to understand, thereby lacking in common sense… Just my thoughts.

Rafick Valli

May 2, 2023 at 12:25 pm

Excellent! The best talk thus far summarising the current state of AI.

Sheeraz

May 2, 2023 at 1:38 pm

The Alpaca LLM model cost $600 to train. You can run Alpaca Electron off your desktop. It’s not as sophisticated as ChatGPT, but the economies of scale are there. As time goes on it will be easier to train these LLMs, and they will cost considerably less.

Shawn Vandever

May 2, 2023 at 3:02 pm

I looked into this, and while yes, it gets some of these easy questions wrong, you can ask the model if it’s confident about its answer. It will then catch the mistake and answer correctly. You can also give it other questions like the one it got wrong, and it will answer them correctly, at least until its memory expires. The model gets the question wrong by assuming it’s a math problem, but it is able to self-reflect and correct the answer. While this is an issue, they are making it way bigger than it is. I am willing to bet OpenAI gets this fixed within a year, and not with “brute force”.

khalid benabare

May 2, 2023 at 5:31 pm

I like it

Patty Qi

May 2, 2023 at 7:20 pm

* AI is undeniably powerful but often makes small silly mistakes due to extreme scale.

* Extreme scale AI models are expensive to train and concentrate power in few tech companies.

* AI needs robust common sense to be safe for humanity and brute force scale is not the only way to teach AI.

* Common sense is among the top priorities for AI to overcome the challenge of maximizing objectives without human understanding.

* AI needs to be taught common sense, norms, and values in a sustainable and humanistic manner through innovation in data and algorithms.

* AI is smart and stupid: AI can excel at specific tasks but lacks common sense.

* Advancements in deep neural networks: Future AI systems will need to build on these advancements.

* Scale is important: There is a “Goldilocks Zone” of scale that is optimal for AI.

* Winning recipe is unclear: Synthesis of ideas will be critical for developing AI systems.

* AI is a complex field: There is still much to learn and discover.

berbudy

May 2, 2023 at 9:19 pm

She should be in charge of this, she gets it intuitively, that is wisdom.