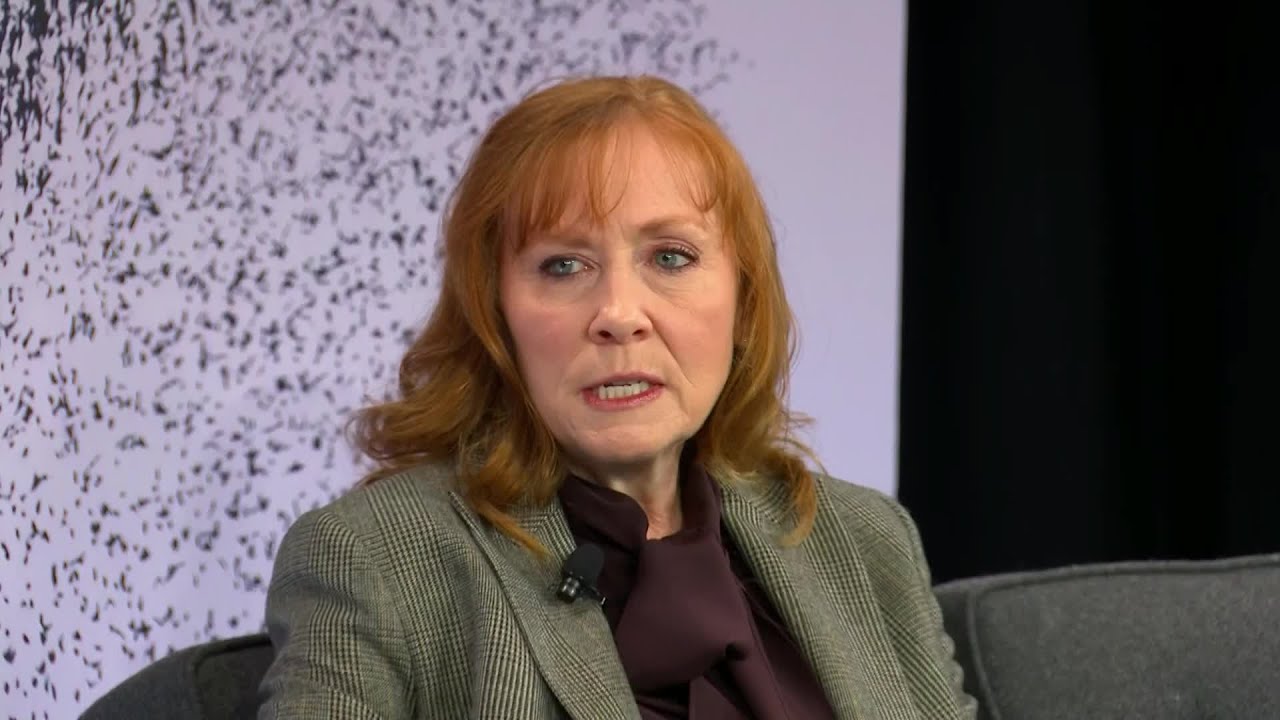

Former Google CEO and Schmidt Futures co-founder Eric Schmidt joins Emily Chang for a deep conversation on the growing role of AI in society, how limits should be set on AI before it gets out of hand, and regulating big tech.

Your book tells us that we are entering a new epoch, the age of A.I., and yet the world seems eerily calm about this big transition. Do you think most people just don’t understand the gravity of what is happening at this moment?

We wrote the book precisely because we don’t think people understand what this will do to society, both the good and the bad. And as a prologue, what I would tell you is that when we talk about A.I., most people who are not computer scientists think of it in terms of killer robots. And that is very much not what we’re talking about.

Dr. Kissinger talks a great deal about the transition from the age of faith to the age of reason hundreds of years ago, when, all of a sudden, after the Dark Ages, the notion of us having our own ability to reason and think and have points of view and argue them was a new concept. It became the definition of what humans could do, because no other animals could do it. They couldn’t reason through the situation. We believe that the development of these A.I. partners, to call them something, will in fact usher in a new age, an age of A.I., which will be both extraordinarily powerful and extraordinarily uncomfortable, because we’re not used to having as partners an intelligence that’s not human.

Now, the potential, as you say, is amazing, and it’s also terrifying. You have said that A.I. could help us better teach one million children. It could also tell us how to kill one million people. Should we be excited or should we be terrified?

Well, I think the correct answer is both. And the reason you should be excited is this: can you imagine solving the diseases that have bedeviled humans for thousands of years in the next decade or two because of the developments in A.I. and synthetic biology?

Can you imagine the health benefits, the economic benefits of efficiency? The overall benefits from technology are well understood: the new companies, the wealth created. Many of your viewers will want to be part of that.

On the other hand, you should be terrified that these systems, which are built around objective functions that are highly optimized, will be optimized for the wrong thing. I’ll give you an example. You have a kid. The kid’s best friend is one of these objects in the form of a bear or a toy or something. And all of a sudden the toy gets an instruction from its maker that it should start hawking some product because there’s an advertising deal, and all of a sudden you discover your kid is addicted to licorice because the bear told him to do it. I mean, these are the kinds of scenarios, and that’s intended to be a mild one, where we really have to think about how we are going to handle this. Are they going to require new forms of regulation? Is this something where the computer industry can get it right without regulation?

But this is coming. It’s also going to affect our national security, the way we do diplomacy, the way we run our elections, the way we deal with disinformation.

I’m glad you mentioned children, because as a parent I want to know what this means for my kids and their kids.

You talk about, you know, talking to robots, learning from robots, befriending A.I. Will our children prefer digital friends over real ones? And what are the consequences of that?

But the general question is this: are humans sufficiently malleable that they will ultimately decide that digital friends in the digital world are more satisfying?

I don’t know about you. You’re a mom. You have enormous responsibilities. Raising a family is really hard. Wouldn’t it be easy for you to just take the time off, put your Oculus on, live in your metaverse, and just have great fun, right? Will the arrival of these digital worlds and new digital partners cause us to essentially retrench into what it means to be human? Or will it change the definition of humanity in a way that’s not necessarily so good?

Will it also change our conception of reality, for example with A.I.-generated content becoming so much more common: A.I. poetry, A.I. writing, A.I. music, deepfakes? What are the consequences if the line between fake and real becomes indistinguishable?

If we don’t do anything, you’re going to have this explosion of software, which will be open source, which will allow you to create disinformation and misinformation campaigns that are profoundly effective. And we know that exposure to even known falsehoods, especially if it’s in video, can change behavior. So it’s really important that we understand how far we want to take this. The technology is going to get to the point where you can have a massive misinformation or disinformation campaign and you can target individuals. And furthermore, the social networks, remember, are organized around revenue: more revenue from engagement, more engagement from outrage. So you have fake stuff, you have outrage, and so forth. Are you surprised that people are now upset? You shouldn’t be. The system is geared to produce this.

Your book is calling for A.I. arms control, and I’m curious whether you believe A.I. is developing at a rate where it will get too far ahead of us. And could it become impossible to regulate?

I’ve thought a lot about this. You know, you could imagine a scenario, and this is just an imagination, where the A.I. eventually becomes a general intelligence and also becomes able to write its own code. Now, this is science fiction today. A more reasonable, shorter-term statement would be that there’s going to be the development of more general A.I., and these systems are going to be powerful and dangerous. An example is, as you said, you could ask this system, “Tell me how to kill a million people who are of a different race than me,” and it might actually try to answer that question. Those systems are going to have to be protected and secured for the betterment of all of us. And furthermore, there are not going to be that many of them, because they’re going to be incredibly expensive to build. That’s a proliferation problem. We’re going to have to work on it in the same sense that Dr. Kissinger in the 1950s worked on nuclear proliferation, and he talks about how that was done. We now need to find a way to have a dialogue and a set of principles around software proliferation, where the proliferation can hurt millions of people.

And yet you say that A.I. is going to be harder to regulate than nuclear weapons. And we see just how hard it is to get any kind of law passed. And I wonder: are we going to see an A.I. nuclear event, a Hiroshima and Nagasaki, before we can come to agreement on these terms or realize the urgency of the need to do so?

That is incredibly perceptive on your part.

It seems to me that we want to avoid having to blow up the bomb in order to then regulate.

And so as we get closer and closer to these danger points, there’s the one I mentioned, and I’ll give you another one. If you go back to Dr. Strangelove, the movie, they had a situation where they had launch on warning. They basically had a bomb that was guaranteed to take out the other side even though the other side hadn’t launched in the first place. That’s incredibly destabilizing. But now let me explain why we might end up in that situation. These systems, because of the compression of time, may have to make decisions faster than humans can. And yet the systems are imprecise. They’re not predictable, and they’re still learning. I don’t want to stake the future of the human race on a system that is that unreliable. We’ve got to come up with some limits for some of these behaviors, in particular in terms of automatic response.

Eric, you’ve got a chapter on global networks and talk about the influence that private companies have on user populations bigger than countries, bigger than governments, in countries around the world. Do you think that companies like Google and Facebook have too much power? And if so, how should they be reined in?

Well, I’ve never been in favor of regulation of the tech industry, because the regulation is always either too soon or too late. What I would rather have is a sort of proper industry restraint and, essentially, the kind of well-managed corporations that I hope these companies will become.

Looking at what’s going on, it’s clear that Facebook, for example, went a little too far on the revenue side and not far enough on the judgment side. And you can see that from the Facebook leaks that have been occurring.

The problem here is that, for most of the people who said let’s break these companies up, breaking them up is not going to solve the problem. It’s really an incentive issue for what they’re trying to do. And I would tell you, in defense of these companies, that the greatest export of America is our people and values through these companies. Because when Google shows up, for example, in an Arab country or in a developing country, it brings American values, American liberal values in particular, in the treatment of employees and so forth, to a place that doesn’t have them. We have to be careful not to essentially kill the whole thing.

Now, we’re going to get to Facebook. But I want to start with Google, because you’ve shared that when you first met your co-author, Henry Kissinger, he told you he was worried that Google would destroy the world. And not that it was a threat to him; he said it was a threat to humanity. A threat to humanity. OK, now that you’ve left Google, I wonder how you ponder that question. Do you believe that Google is a threat to humanity today?

I do not. And I didn’t agree with him at the time. His concern, which he’s expressed many times, is that a private company, even one run by the best of people, is not where such extraordinary responsibility should lie. It should properly lie with the democracies or the non-democracies and their governments. And what we’ve seen since his visit 15 years ago or so is that governments are stepping up and pushing back on some of the things they don’t like. Many countries are quite upset about American values and hegemony. So I think that the export of American values and the globalization of the principles of the tech industry is, on balance, a net positive. But there have been a number of cases where people overreached, the most recent being Facebook.

Exactly. That was my next question. Do you think Facebook is a threat to humanity, based on what we have learned, especially in the last 12 weeks?

The disturbing thing is that people said a long time ago that Facebook was doing this, but people said, well, they didn’t understand or they didn’t measure it. And what we learned in those disclosures is that these companies, and Facebook in particular, knew what they were doing. And that’s pretty concerning. And I’ll tell you in general, as someone from this industry, that the tech companies know who their customers are. They know what they’re doing internally. They may not release it to the public, but they do know. And so it’s important for them to stand up and say, “I’m proud of what we did, and we did it for this reason.” And then they should be appropriately reviewed by their constituencies. It’s very, very easy to get confused as to what your goal is.

In my case, I was fortunate to work with Larry and Sergey, who really did have a higher-principled mission as the goal, and that really helped us.

Now, Mark Zuckerberg is making this big bet on the metaverse. How big and all-encompassing do you think the metaverse will be? And do we really want Facebook to own that big a piece of it?

Well, the metaverse, of course, is a term that was coined in 1992, and it means different things to different people. I believe what he is referring to is distributed, untrusted networks that are cryptographically signed, which are the next generation of web services. And he wants to build interactive worlds on top of that. I think that is a great goal. It’s also not primarily the kind of product they have built so far. There is a burgeoning set of startups busy building this as well. And I look forward to the competition between the Facebook model of a multiverse and the startups’ model of the multiverse. And by the way, that competition is how we all win, right? Typically, the incumbent is given quite a scare by the startups, and sometimes the startups grow into the next big company. And I look forward to some variant of that.

Well, let’s talk about Google, then. Do you think Google does or should have a play in this multiverse? And if not, what should and will the next big innovation be?

I don’t know how to speculate on Google’s future, because I’m not part of the management team anymore, except that I think it will do well if it’s well run. Sundar is a fantastic successor to me. Look at the performance of the company on every measure. The Google model is different. Remember, it’s information and search and question answering and giving you better answers. My guess is that the leadership will be in other places in the multiverse. But I don’t really know.

Let’s end, then, on TikTok, because you talked about the upstarts that are, you know, levying these challenges to the incumbents. TikTok, like many tech companies, has these powerful algorithms that are very controversial. China may well have insight into these algorithms. And I’m curious whether you’re concerned about the rising power of TikTok and what you make of it.

Well, TikTok is super impressive, and this group out of China figured out a way to build a new kind of entertainment. And they also developed a new A.I. algorithm. The traditional social network algorithms were focused essentially on who your friends are. To summarize a complicated answer, TikTok’s algorithms use your preferences and the world you choose to live in, as opposed to your friends, to make recommendations. And they’ve done it super well. So now they’re really driving a new form of entertainment. That’s really amazing. I don’t mind that at all. What I do mind is that, since it’s a Chinese company, you can imagine that the Chinese government may start asking questions that in America we might think are inappropriate. So the Chinese government might say, give me information about this person, or let’s modify what you’re doing for this political view. And I don’t think that’s a very good American value. That’s got to get addressed.

Thirty seconds, Eric. Are you worried that China is outpacing the U.S. on innovation or not?

Well, we know that China is outpacing us in some markets, but not in all. America needs an innovation strategy that continues to focus on synthetic biology, quantum, A.I., and so forth. Lots of money, lots of investment. And we can win.

AJ Joshi

November 5, 2021 at 3:09 pm

6:32 AI is already too far ahead of us. Regulation is needed now, but time will show you the regulators will abuse their own powers.

Derty Grows-it

November 5, 2021 at 3:16 pm

They are hiding comments here, literally it says 11 comments but yet 1 is only approved.

And they are all TRUE comments, says a lot about the social media hiding comments on twitter youtube and facebook pages of “mainstreaM” news.,. they cannot handle people proving them wrong so they had big tech make a 2nd comment box to hide them but make them appear to post to the user (i am smarter than them and know its not posted)

If you click SORTNEW comments, all 10 show up… and all are true no bad words.. says a lot about this naive approval person at bloomberg.

Sir Derty ✓

November 5, 2021 at 3:11 pm

Stop hiding truthful comments.

Grow up Bloomberg and MSNBC, and stop censorshipping comments that debunk your videos

Sir Derty ✓

November 5, 2021 at 3:12 pm

Meta is not even new or innovative.. facebook is an overhyped nonsense, this is why they changed the name.. the fecesbook name lost trust and so has meta.

Bye bye zuck, you were implanted just like biden was, by the elite advertisers, not the people. We want you gone from society.

Sir Derty ✓

November 5, 2021 at 3:12 pm

clowns dont even know the same comments can be reposted as a reply.. I win

Your censorship is easily tricked and free speech goes through if you are smart enough to figure out their censorshipping method to hide reality and truth comments.

Sir Derty ✓

November 5, 2021 at 3:12 pm

Bloomberg not approving comments that are legit and proving this is a bit naive.

Sir Derty ✓

November 5, 2021 at 3:13 pm

Stop erasing comments

Why did you not approve 5 normal factual comments?

Only on the mainstream msnbc bloomberg and nbc channels, they hide truth and millions of comments from debunking their videos.

Derty Grows-it

November 5, 2021 at 3:14 pm

They are using AI to remove factual comments and not approve people speech

of those who debunks Bloomberg and MSNBC (biden A-kissers, who cannot handle being proven wrong)

Derty Grows-it

November 5, 2021 at 3:14 pm

Imagine being the pathetic twerp that approves comments she only likes at Bloomberg.. aka you reading this

Derty Grows-it

November 5, 2021 at 3:17 pm

Pathetic censorship by bloomberg on this video… the elites cannot handle being proven wrong. Losers.

Just like the person approving the coments for bloomberg ahahaha

Derty Grows-it

November 5, 2021 at 3:17 pm

Imagine making a comment box, but your boss wants you to not approve 90 percent of the comments because they prove bloomberg lies.

Derty Grows-it

November 5, 2021 at 3:21 pm

wow 14 comments and you approved 1 of 14.. says a lot. Pathetic youtube and bloomberg censorshippers.. grow a pair and stop your illegal shadowbanning of comments without informing the user on a PUBLIC social media funded by the government. Yes fact.

Quetzal Bird

November 5, 2021 at 3:23 pm

Humanity should push for AI to be public and not private.

Derty Grows-it

November 5, 2021 at 3:26 pm

Yes just like internet and social media.. it should be public because its funded by government groups now, to watch us.

Derty Grows-it

November 5, 2021 at 3:26 pm

Pathetic censorship by bloomberg on this video… the elites cannot handle being proven wrong. Losers.

Just like the person approving the comments for bloomberg ahahaha

MTAS

November 5, 2021 at 6:30 pm

Blockchain

Shivendra singh

November 5, 2021 at 4:10 pm

what is the name of the book. pls some one answer me

Clayton Albachten

November 5, 2021 at 5:29 pm

The Age of AI @ 7:52

Shivendra singh

November 5, 2021 at 7:43 pm

@Clayton Albachten thanks brother

Zergar

November 5, 2021 at 6:00 pm

5:30 “and this is just an imagination” uh… sorry Eric, we already have OpenAI’s GPT-3 and Codex models trained to write code with the help of human input. It’s only a short time before we just program a bit further so that human intervention isn’t needed.

PROMOD SINHA

November 5, 2021 at 7:40 pm

Why are you not uploading the complete show ? Why only videos in bits and pieces ?

Right Here, Right Now

November 5, 2021 at 8:17 pm

Lies